Ryan Yen

HCI Researcher / Developer

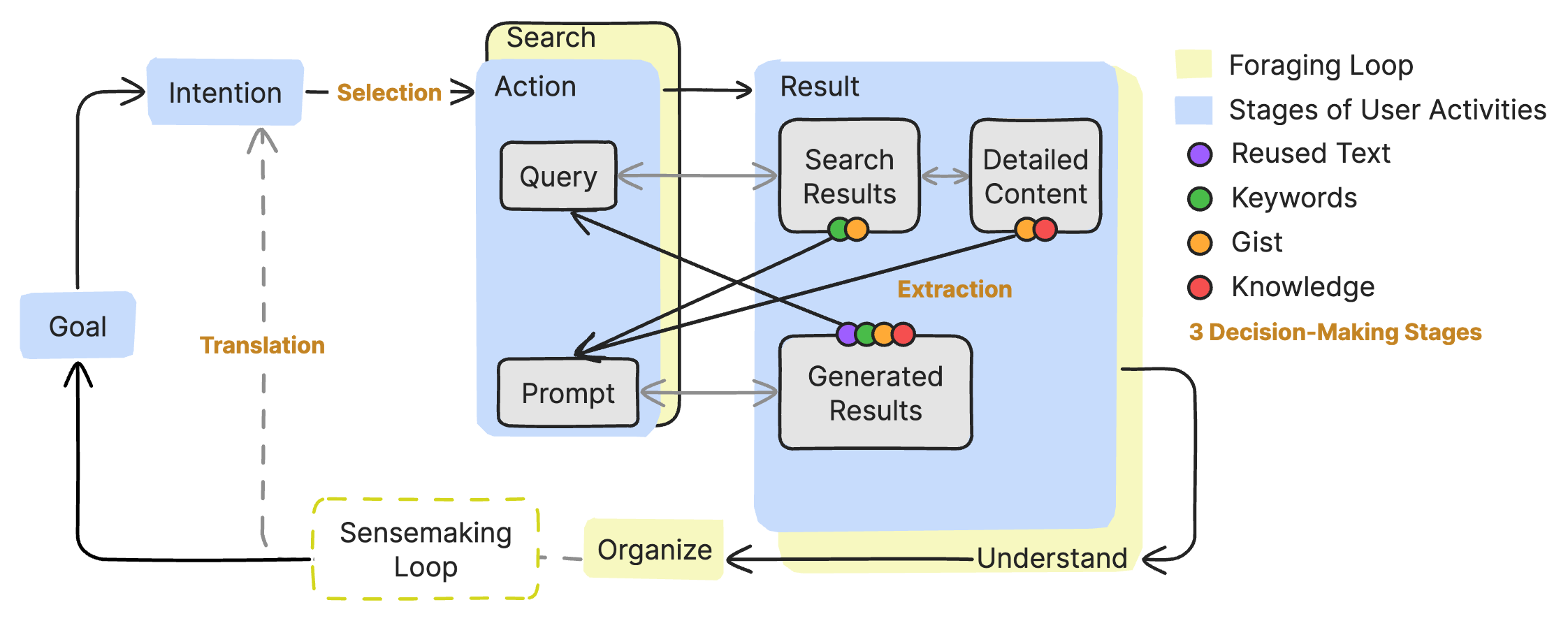

Human-Computer Interaction / Computational Theory Systems / Programming Interface

I am currently a Human-Computer Interaction researcher. In the past, I was a full-stack developer, and I aspire to become an entrepreneur in the future. My current research interests are malleable programming interfaces and interaction.

I will pursue my PhD at MIT CSAIL under the supervision of Dr. Arvind Satyanarayan to continue my research on programming interfaces. I studied for my master's in Computer Science at the University of Waterloo, mentored by Dr. Jian Zhao of the WatVis Lab. I was also guided by Dr. Zhicong Lu of the DEER Lab and Dr. Can Liu of the ERFI Lab at CityU HK.

Current State

I'm actively looking for a research internship position for the summer of 2025, working on malleable programming interfaces.

- Research Interest

- Hobbies: Violin / Swimming / Board Games

Latest News

Invited talk at Tableau Research 2024-11-12

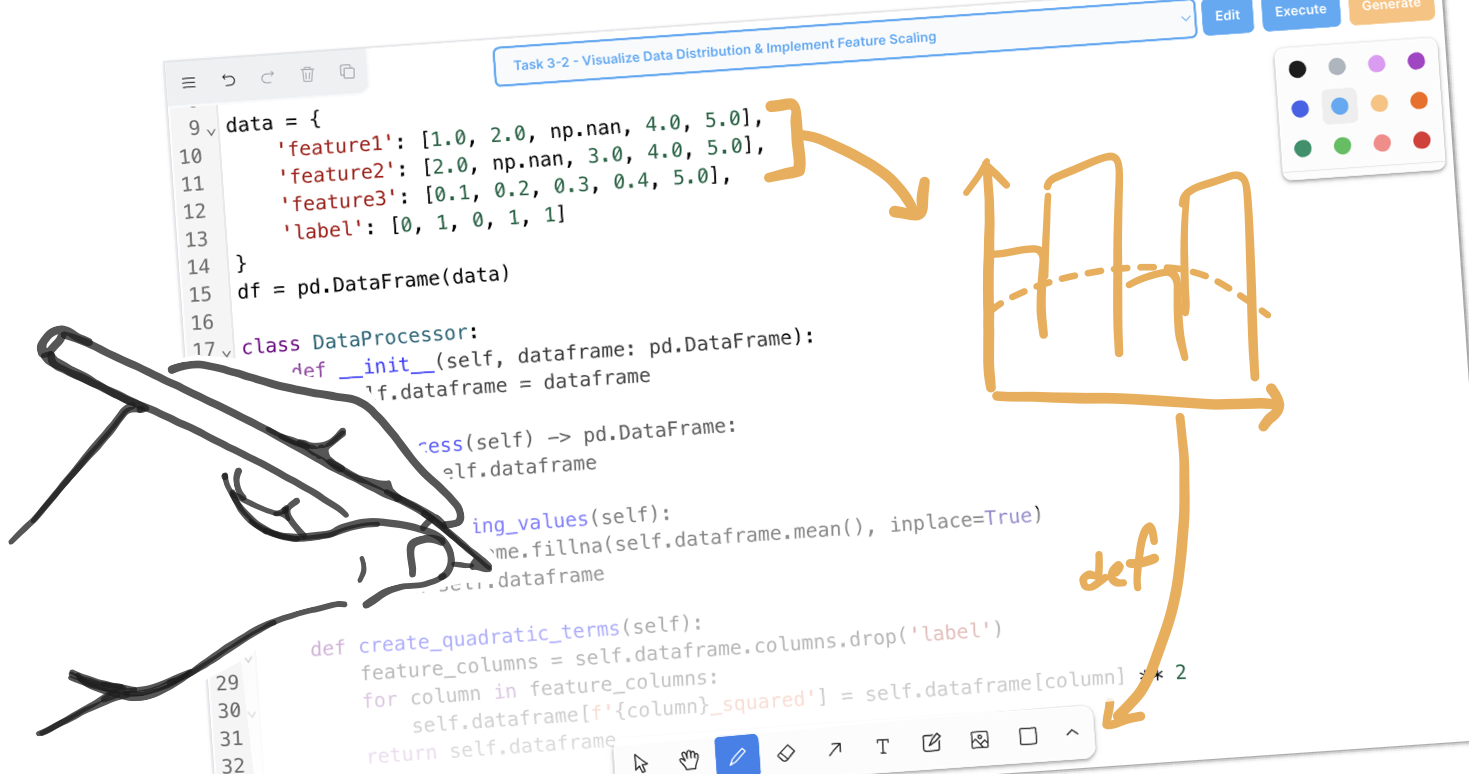

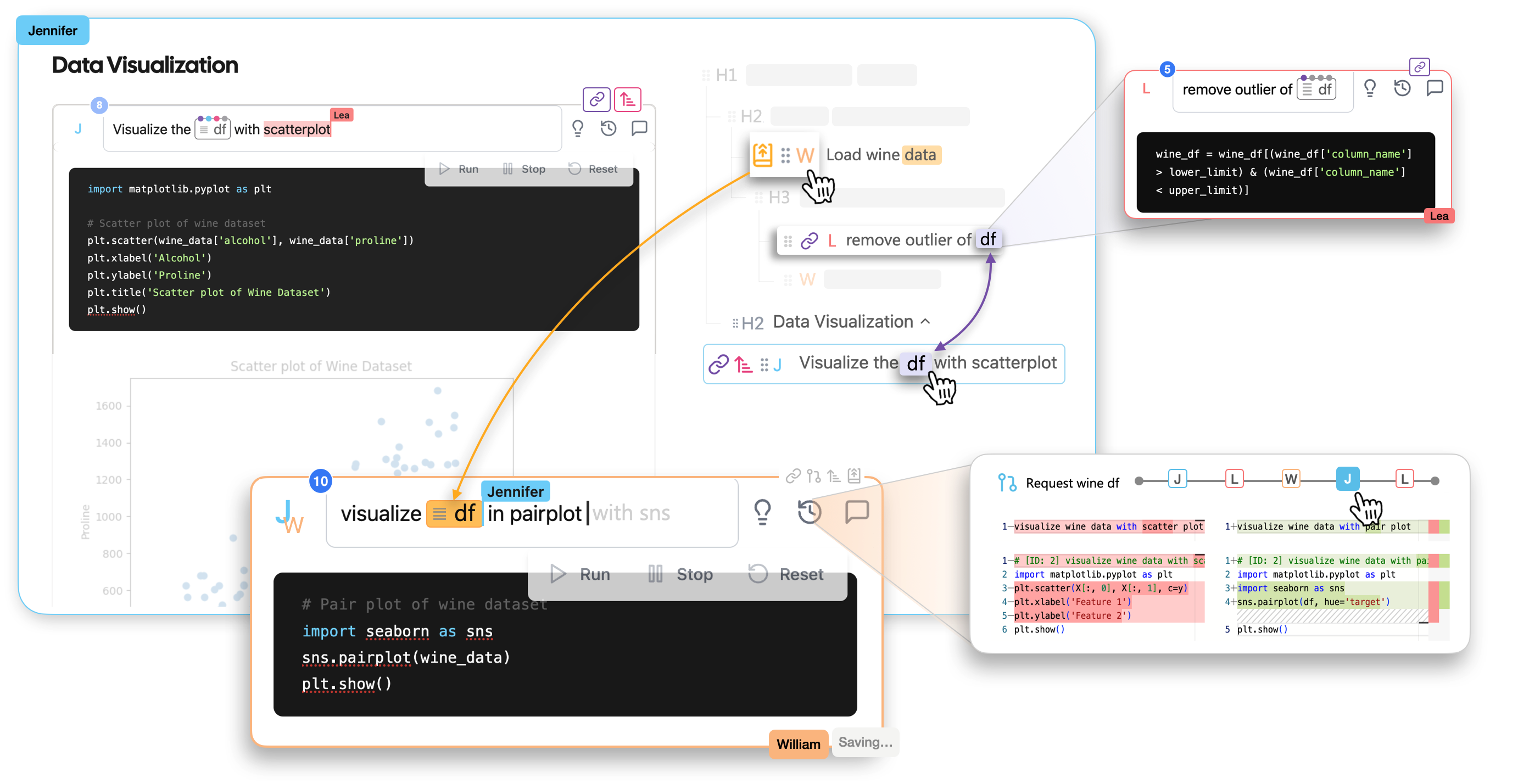

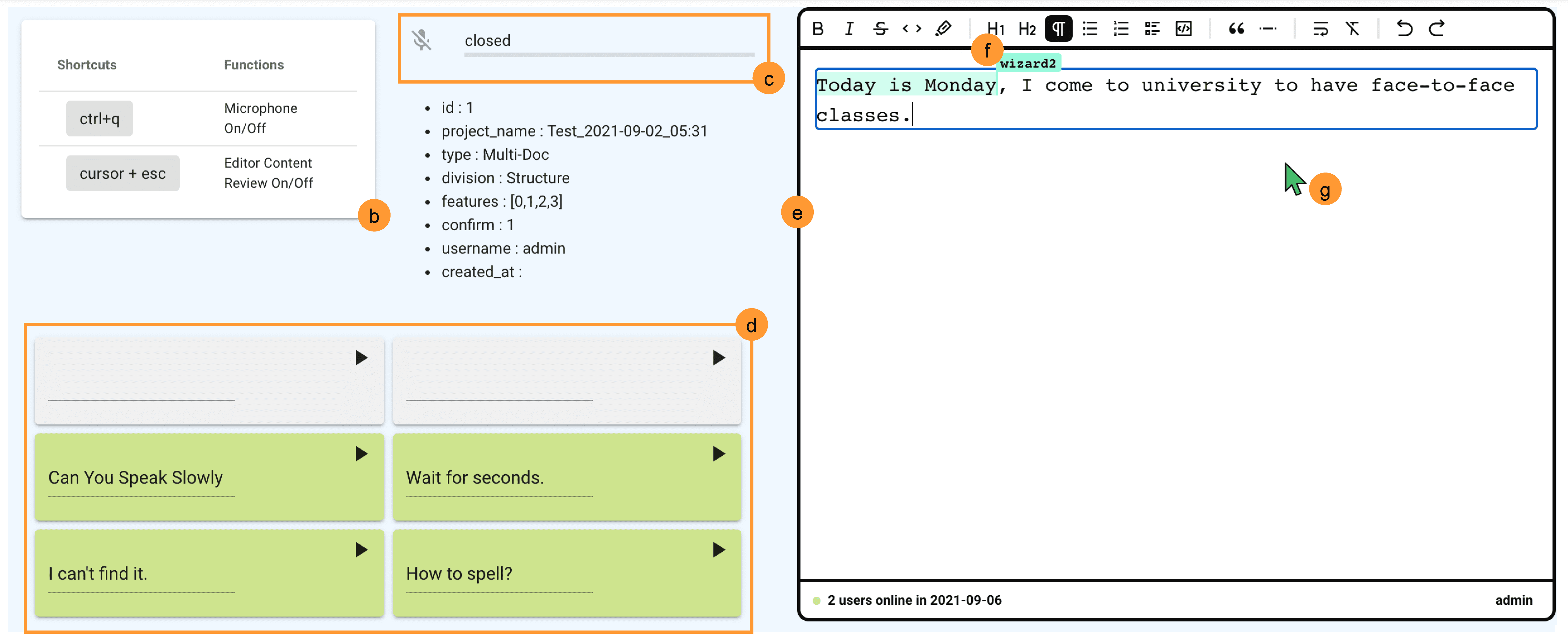

I was honored to give a talk at Tableau Research on malleable interaction interfaces for programming. I shared my research on the design of a system that enables programmers to edit code dynamically.

3 papers + 1 poster at UIST24! 🌆 2024-07-31

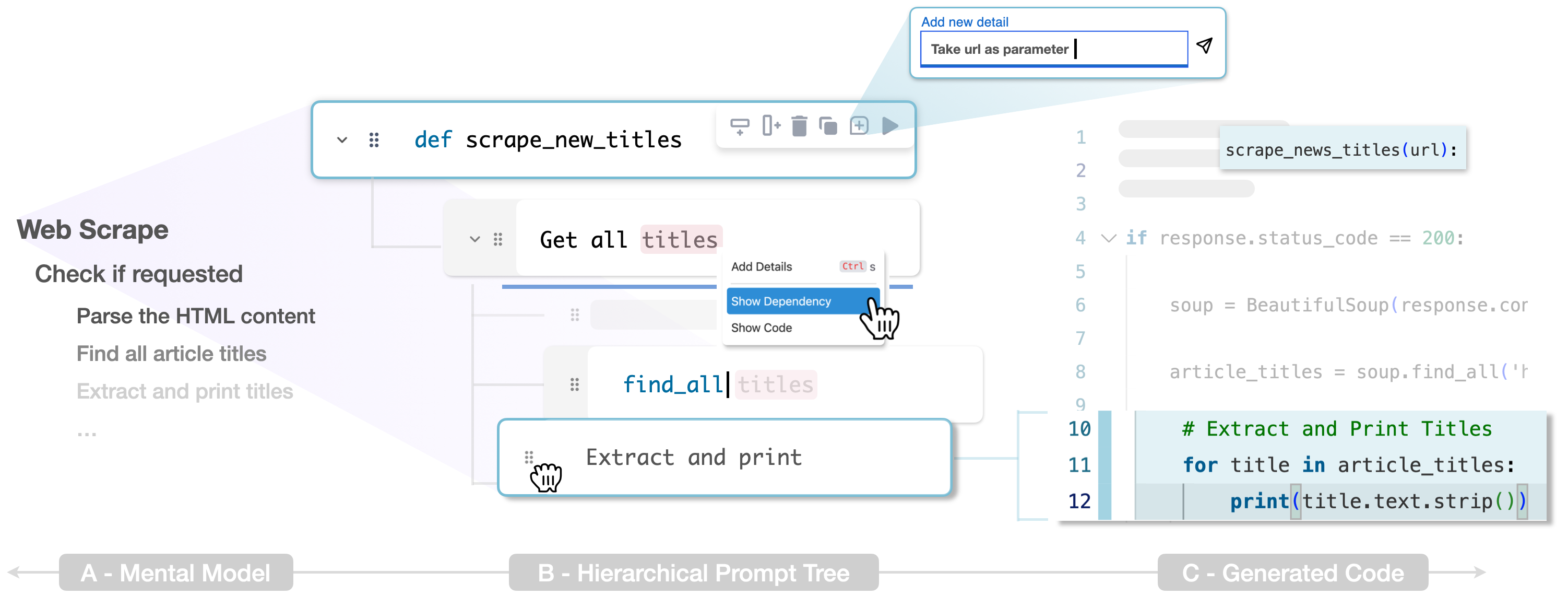

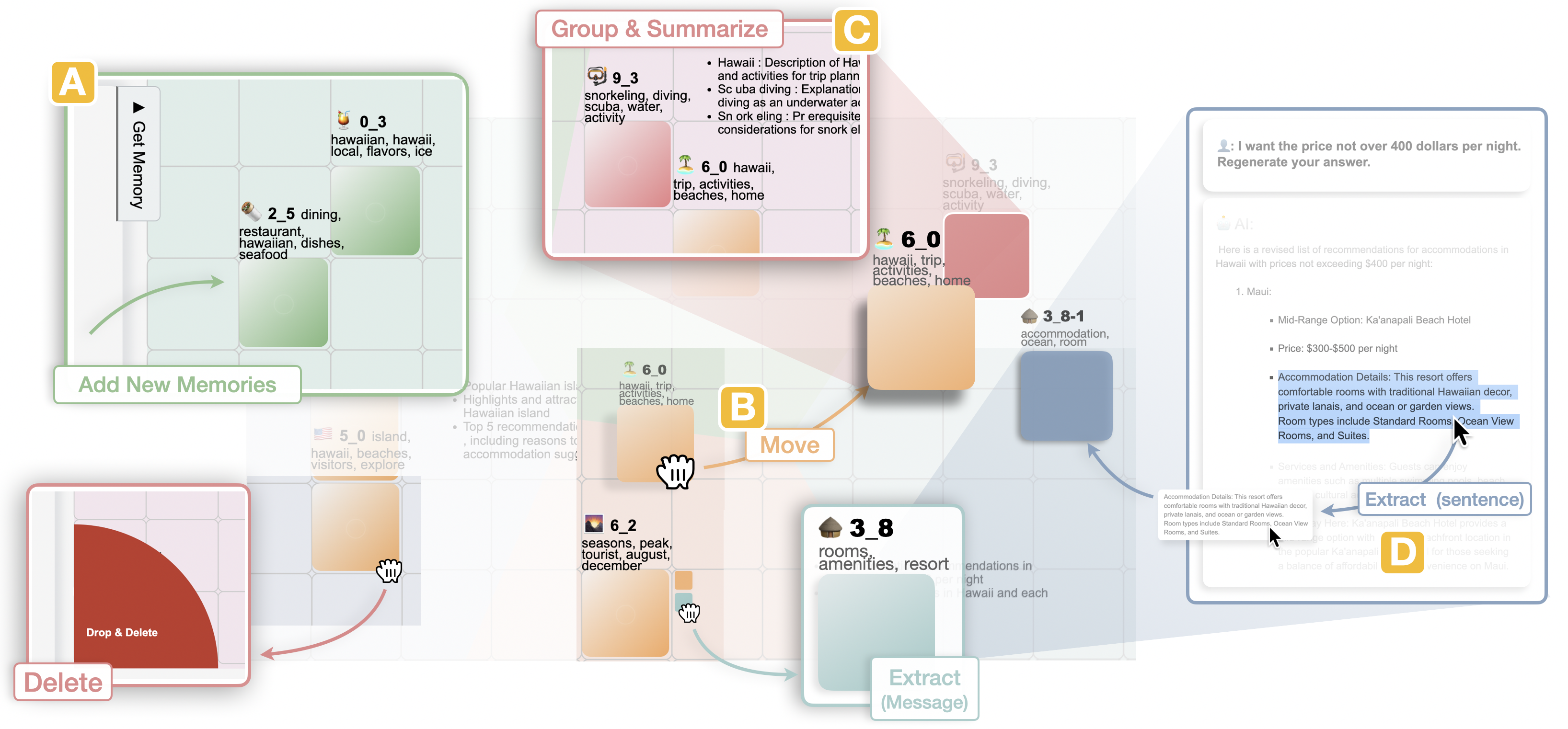

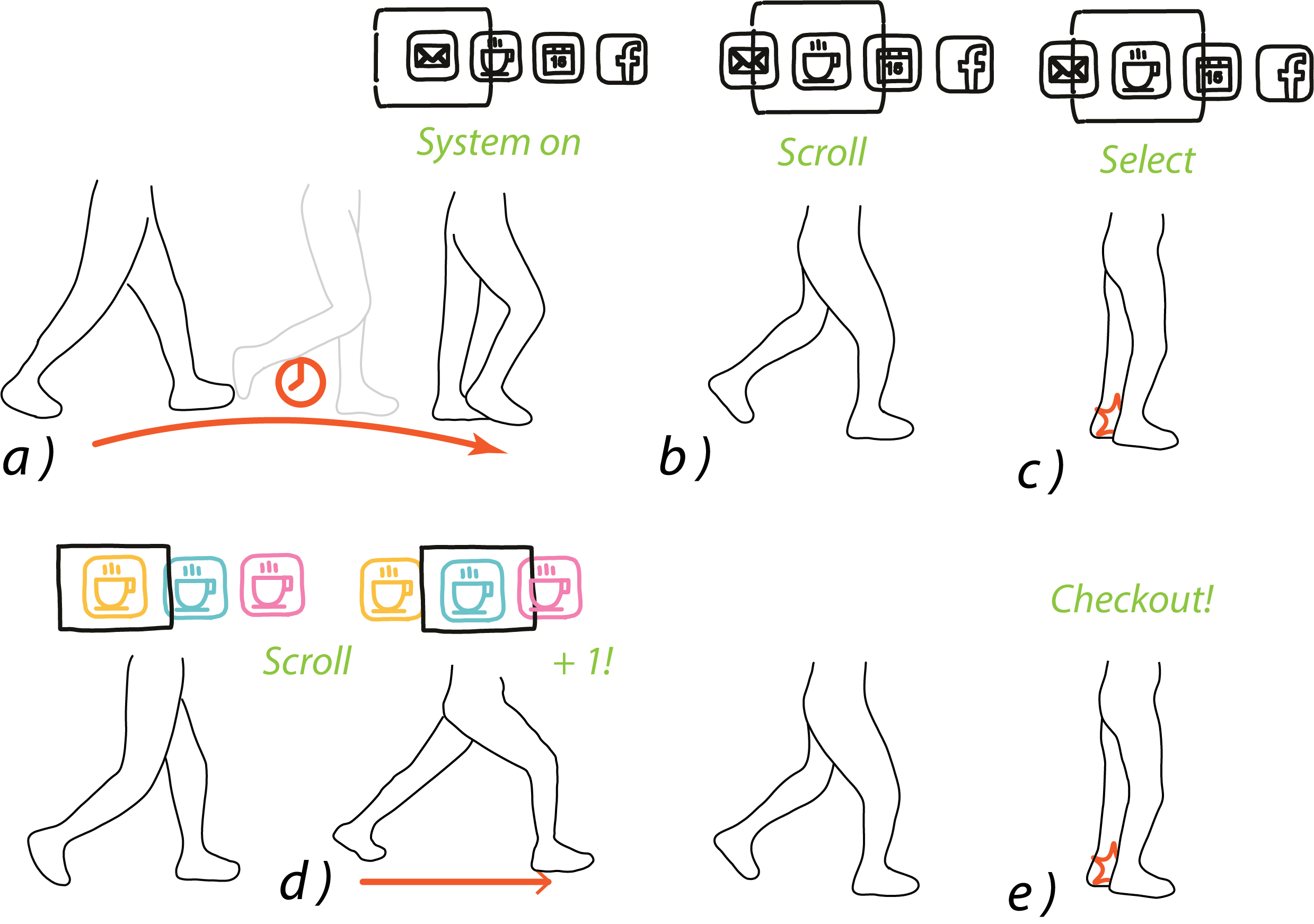

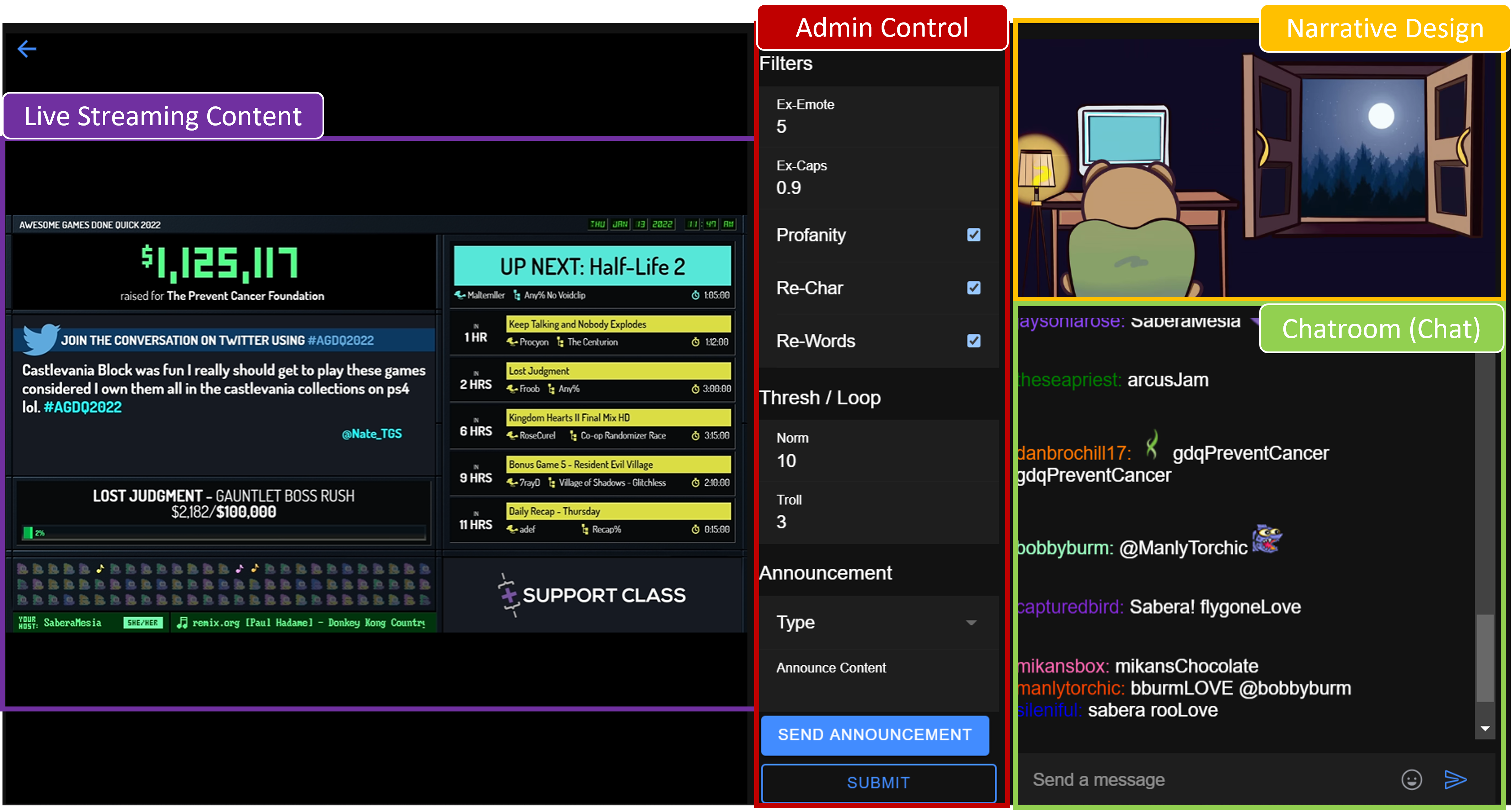

CoLadder, Memolet, and a paper in collaboration with ChingYi on eliciting walking gestures for AR.

1 paper + 1 LBW at CHI24! 🌺🏄 2024-02-06

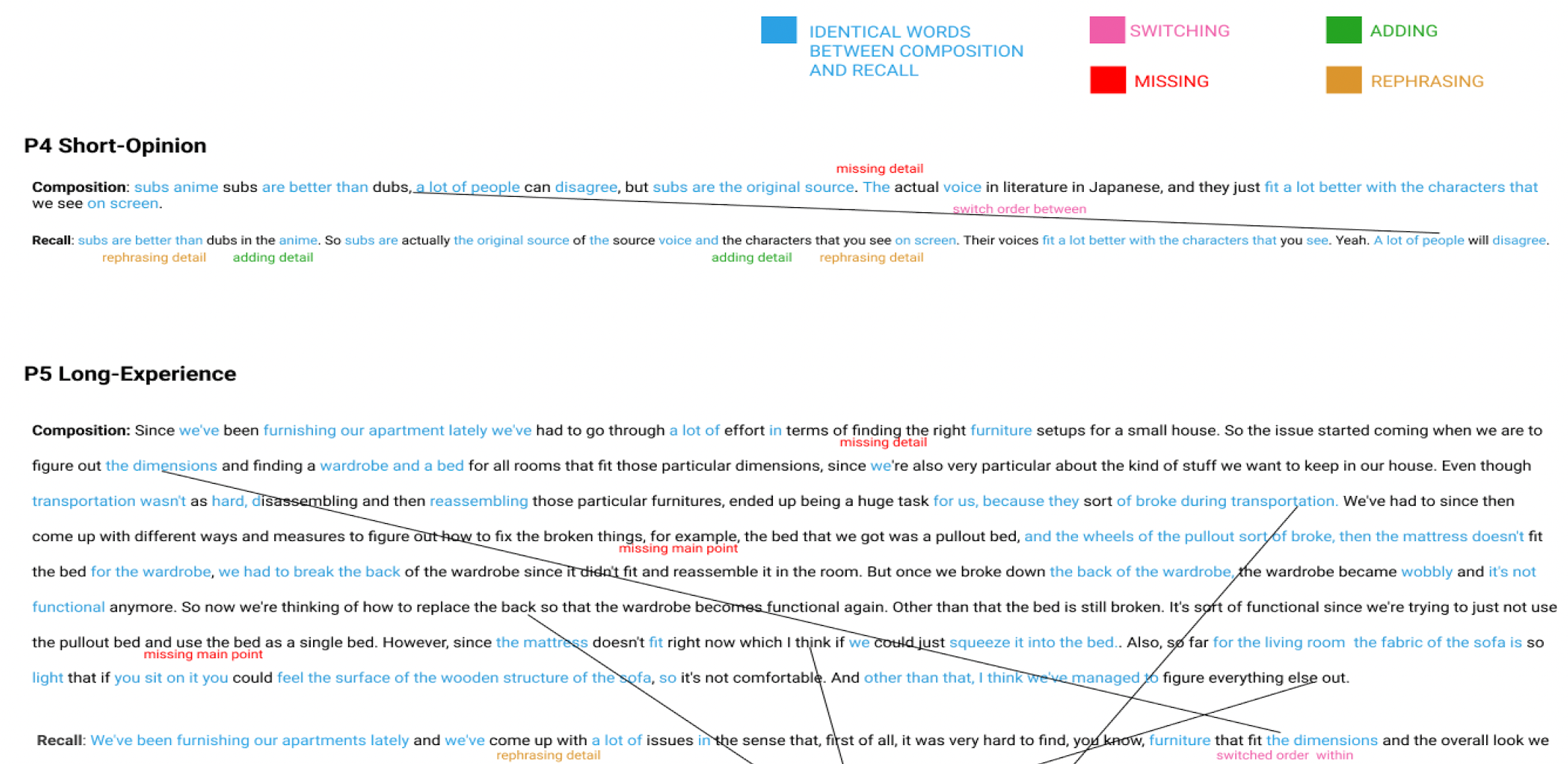

We explored the workflow of collaborative natural language programming and designed a system to support prompt sharing and referring.